From solutions for people with diabetes to help for those affected by ALS: some examples of how technology can improve our lives, lower the cost of care and make it accessible to more people.

2020 opened with the news that artificial intelligence (or rather, one of the artificial intelligence systems) developed by Google has become very good at identifying breast cancer, even better than its human colleagues.

This is just one example, the most recent, of how ever-better software (because that’s what this is all about) can help humans in many fields. Medicine is one of them: last November it emerged (not without some controversy) that Big G had struck an agreement with Ascension, the American healthcare giant, to collect and digitize the data of tens of millions of patients in almost 3,000 hospitals. Why? To feed this enormous amount of data to its AI: to have it read all the medical records and the results of exams and blood tests, and to show it the X-rays, so that it can learn which symptom corresponds to which disease, what a given disorder indicates, and what it means when a particular value is “high”… all in order to help human doctors reach a diagnosis with incredible precision, based on statistics and precedents.

Incredible as it may seem, in the hyper-fast world of technology this is already the past: the future of this kind of technology lies in practical applications that are useful in everyday life. Once again it is the Mountain View giant that shows the way, as is clear from the four-episode documentary “The Age of A.I.”, presented on YouTube Originals by the actor Robert Downey Jr.; episodes 2 and 3 in particular (video below) explore the relationship between health care and artificial intelligence in depth.

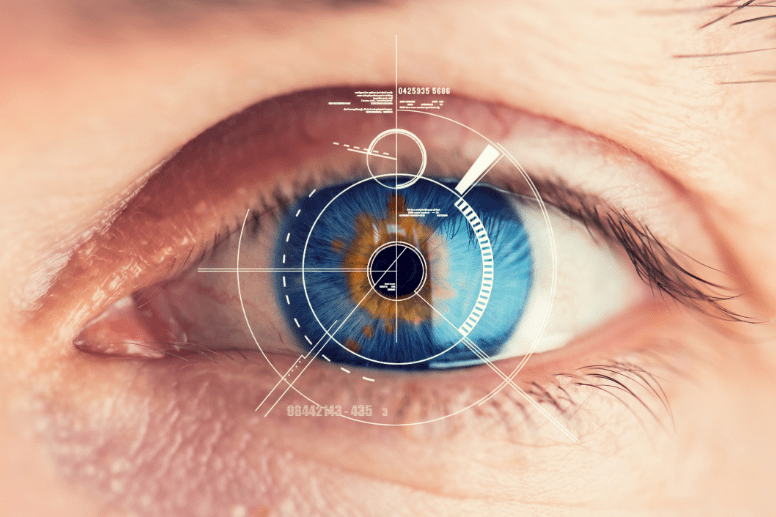

An “optical robot” to find retinopathies

First of all, prevention: Verily, a small company born under the semi-secret Google X division, which handles Big G’s experimental projects, has worked with the Taiwanese company Crystalvue to develop an “intelligent” retinal scanning machine for use in some hospitals in India.

Again: why? Because the country has a very high incidence of diabetes (it affects about 70 million people there, out of 400 million worldwide), which can cause vision problems that lead to blindness if they are not diagnosed and treated in time. The problem is that in its early stages retinopathy is asymptomatic, and detecting it requires a retinal scan. In India that is practically impossible: in the country there are 11 ophthalmologists for every million people (the United States has seven times as many), and every day they see more than 2,000 patients. There is simply not enough time for each person to undergo the tests they need, wait for the report and receive a diagnosis. What Google did was acquire images of over 100,000 retinal scans, which doctors voluntarily provided and graded from 1 to 5 according to the severity of the disorder, and then submit them to its AI, which through a process of “image recognition” (the same one that allows Facebook to know the name of the friend who appears in our photo) has learned to recognize even the smallest signs of retinopathy.

Treatment with AI (the retinal scan starts at minute 16:00)

Crystalvue’s machine scans both eyes, compares the result with what it has learned and, in a few seconds, provides a report and advises what to do. The final opinion is obviously up to the doctor, but the device works as a sort of filter, separating serious cases from non-serious ones. And it does so very quickly, at a pace that no human could ever keep up. What’s more, in the long run it also lowers the cost of this type of examination, making diagnosis and treatment more affordable. And there is more: according to Google, by continually “reading” people’s retinas, the very same machine is slowly learning to identify possible blood circulation problems that could lead to heart disease in the future, which would help prevent those as well.

A machine to give ALS sufferers their voice back

Also on the software side, a Google team worked closely with Tim Shaw, a former American football player, on the Euphonia project, which could help patients with amyotrophic lateral sclerosis in the future. Shaw’s story is both beautiful and terrible: in 2013, at the height of his career, after playing for 6 years as a professional in the NFL, he was diagnosed with ALS; the disease, which causes progressive muscle degeneration, inevitably also affected his ability to speak.

By having DeepMind, its most famous artificial intelligence, listen to hundreds and hundreds of interviews Shaw gave during his years as a linebacker, Google has created a voice recognizer able to understand the player’s words despite his current difficulties.
